A Waymo self-driving car.

The artificial intelligence that guides self-driving cars will need clear moral guidelines on how to make life-or-death decisions. But can we all agree on what those rules should be?

A new study in the journal Nature tried to find out.

In moral philosophy, the Trolley Problem is an intellectual exercise designed to test the bases for ethical choices. It describes a situation in which a person is on a trolley car that has lost its brakes and is hurtling toward pedestrians.

The person in the trolley must choose whether to let the collision happen or switch to another track and collide with a different group of people.

The thought experiment is further complicated by changing who is in each group.

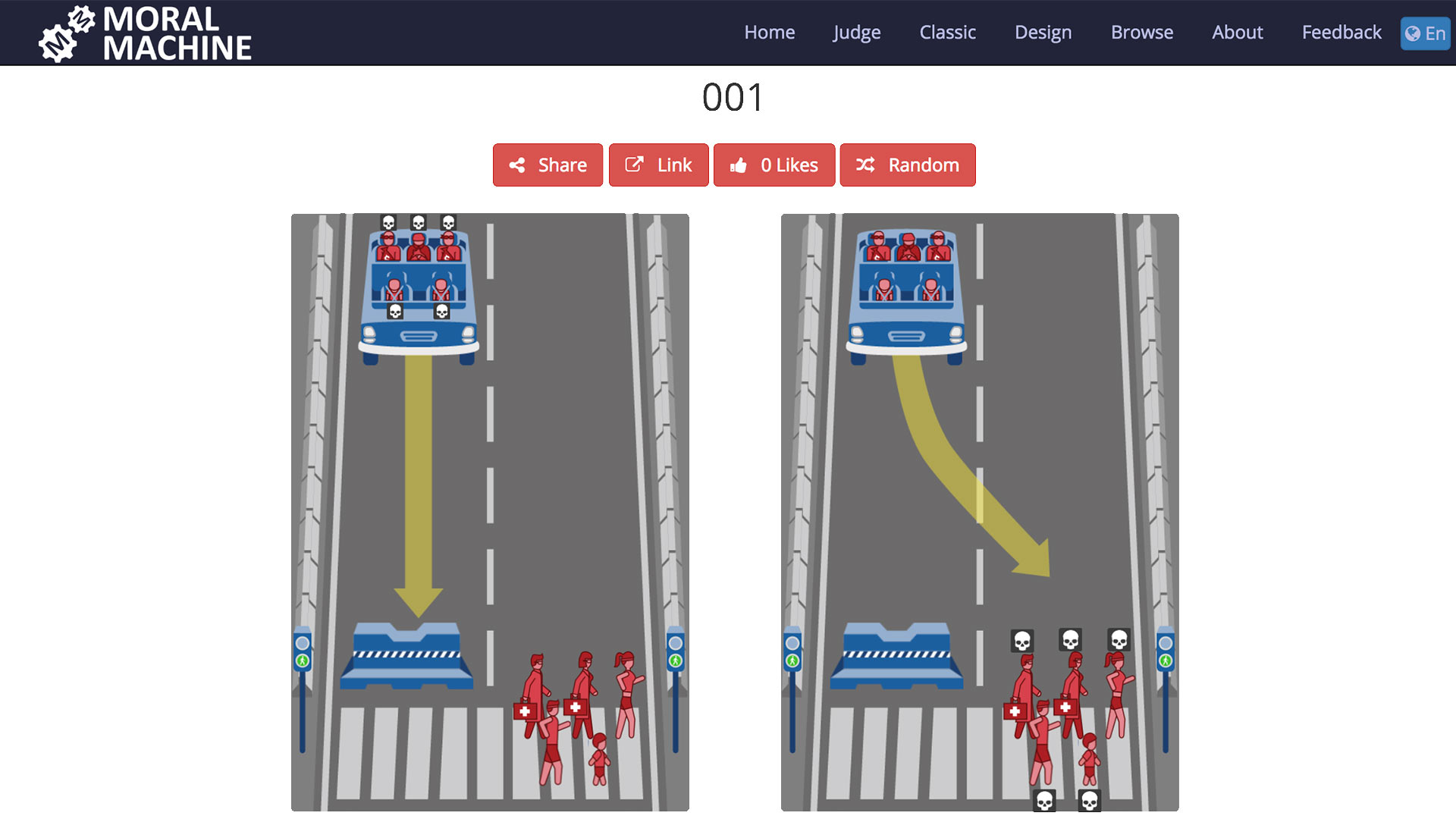

An image of the website of the Moral Machine, an MIT-created tool presenting users with moral dilemmas.

To see if they could determine a set of moral rules everyone could agree on, culturally if not globally, the researchers created Moral Machine, an online test taken by users in 233 countries. It consists of 13 randomly chosen scenarios in which the user must choose between humans and pets, passengers and pedestrians, children and the elderly, rich and poor, fit and not-so-fit, and men and women.

Moral Machine is designed to show what decisions people would make when a driverless car faces an unavoidable crash.

"We, also, as humans, face those moral dilemmas in our daily lives, but probably we resolve them without realizing," said lead author Edmond Awad, a postdoctoral associate at the Massachusetts Institute of Technology's Media Lab.

Overall, people favored saving humans over pets, children over the elderly and more lives over fewer.

But the study, which was based on almost 40 million geolocated decisions, also found cultural clusters that differed significantly in key areas, such as the relative value of saving young people, elderly people, women, the physically fit and persons of high social status.

Awad said his team intended the results to inform future decision models, not offer guidelines.

"The experts should be the ones who make those normative ethical decisions of what kind of moral principles we want for those machines to have. But they could be informed by what the public wants."

The results suggest that an ethics playbook for vehicles run by artificial intelligence could still succeed. But they also point to the work that remains before any set of rules can apply universally.

By submitting your comments, you hereby give AZPM the right to post your comments and potentially use them in any other form of media operated by this institution.